API Documentation (Profanity & Toxicity Detection for User-generated Content / Hate Speech Detection for User-generated Content)

API Documentation -> Profanity & Toxicity Detection for User-generated Content

Here, we described input/output for Profanity & Toxicity Detection for User-generated Content / Hate Speech Detection for User-generated Content.

The API is very simple to use and does not require any linguistic or machine learning skills.

API is available via RapidAPI (link), so you need to apply RapidAPI Key that you get after you sign up.

If you have some other questions, need more information, or you need to get the On-Premise version with 0 cents / text (Big Data Compatible) of Profanity & Toxicity Detection for User-generated Content, contact us.

API Documentation for Profanity & Toxicity Detection for User-generated Content / Hate Speech Detection for User-generated Content

Profanity & Toxicity Detection for User-generated Content API / Hate Speech Detection for User-generated Content API consists of only one endpoint (method) - process. It allows you to process user-generated content (comments, reviews, forums, etc.) and detect toxic and aggressive content (profanity words, toxicity, obscene words, threats, insults, identity hates, and others).

process(title, text) [Endpoint]

Below, you will find the description of each field, both input, and output.

Input (POST):

- title (optional) - Title of a text/review/comment (UTF-8) that you want to process through API. If you do not have a title, leave it empty.

- text (required) - Text (UTF-8) that you want to process through API.

[Maximum length in total: 5000 characters.]

Example input (POST):

Let's have a look at how this API will process this example text input. Forgive us the language; we are just quoting creative users.

- text="You white idiot. I will find where you live. Beware of the dark."

- title="" (empty)

Output (JSON):

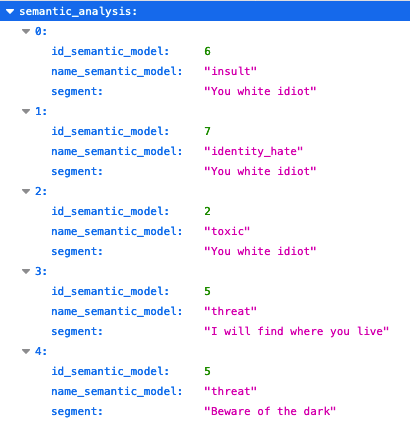

This API returns one section: semantic_analysis. It is a result of processing given input with a set of dedicated semantic models regarding toxic and aggressive content (profanity words, toxicity, obscene words, threats, insults, identity hates, and others).

semantic_analysis

- id_semantic_model - id of the detected Semantic Model. Integer.

- name_semantic_model - the name of the Semantic Model, varchar(50) it describes what this semantic model detects, e.g. .

- segment - Sentence from the text (or part of the sentence) in which the specific semantic model was detected. It might be a little bit modified (simplified) compared to the original text.

Example output:

In this example, 5 semantic models were detected. Each semantic model consist of their id (id_semantic_model), name (name_semantic_model), and a sentence / text segment in which it was detected (segment).

If you have some other questions regarding semantic_analysis output, need more information, or would like to modify semantic models for your needs/your data, contact us.

Example of a JSON API OUTPUT of Profanity & Toxicity Detection for User-generated Content API:

Above, we described each section of the output and displayed it in a more human-friendly form. Below, you will find the format of how our API returns JSON output. The example is the same as above.

Input (POST):

- text="You white idiot. I will find where you live. Beware of the dark."

- title: "" (empty)

Output (JSON):

Example of a JSON API OUTPUT

{

"semantic_analysis":{

"0":{

"id_semantic_model":2,

"name_semantic_model":"toxic",

"segment":"You white idiot"},

"1":{

"id_semantic_model":6,

"name_semantic_model":"insult",

"segment":"You white idiot"},

"2":{

"id_semantic_model":7,

"name_semantic_model":"identity_hate",

"segment":"You white idiot"},

"3":{

"id_semantic_model":5,

"name_semantic_model":"threat",

"segment":"I will find where you live"},

"4":{

"id_semantic_model":5,

"name_semantic_model":"threat",

"segment":"Beware of the dark"}}

}

What's next

Go to Rapid API and you can start using this API today:

- Profanity & Toxicity Detection for User-generated Content (RapidAPI link)

- Hate Speech Detection for User-generated Content (RapidAPI link)

- These APIs are very similar to each other and differ mainly by name.

Go to product site to get more description:

Semantic Analysis for Reviews APIs available on Rapid API:

- Semantic Analysis for Hotel Reviews (RapidAPI link)

- Semantic Analysis for Hostel Reviews (RapidAPI link)

- Semantic Analysis for Vacation Rental/Apartment Reviews (RapidAPI link)

If you have some other questions, need more information, or you need to get the On-Premise version with 0 cents / text (Big Data Compatible) of Semantic Analysis for Reviews, or other Language Understanding API for any texts, contact us.